Quality of Service (QoS): How to Maximize Network Efficiency

Quality of Service (QoS) is a methodology for prioritizing network traffic to ensure optimal performance for critical applications. It is a way to manage bandwidth and minimize unnecessary network usage, which reduces latency and keeps essential services running smoothly, maintaining productivity and ensuring a better user experience.

At your company, for example, you might want video conferencing to take priority over large file downloads to prevent frustrating interruptions during team meetings.

QoS isn't only for large enterprises. Even small businesses can benefit. For instance, a small company that relies heavily on cloud-based software might configure QoS to ensure that critical SaaS applications always perform well, even if someone else in the office is streaming a high-definition video.

Another real-world example is in remote work scenarios. A remote worker might use a SASE solution that includes QoS to ensure their VPN connection gets the necessary bandwidth for seamless remote desktop performance.

Implementing QoS in modern networks

The rise of SASE architectures has heightened the need to implement QoS, which entails classifying and prioritizing traffic. Imagine you're a network engineer setting up QoS for your organization's network. You might use specific rules to prioritize voice and video traffic. This can be done by marking packets with tags or labels.

As a simple example, consider configuring QoS on a Cisco router to prioritize voice traffic using Differentiated Services Code Point (DSCP) tagging. We create a class for voice traffic and match it against the DSCP `ef` (Expedited Forwarding) tag. A policy then gives this voice class 70% of the available bandwidth, and the `service-policy` command applies the QoS policy to the router's interface.
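Routers can only match on DSCP `ef` if something has marked the packets first. As a rough sketch of that marking step from the application side, the Python snippet below sets the DSCP value on a UDP socket. The helper names `dscp_to_tos` and `open_marked_udp_socket` are our own, and it assumes an operating system that honors the `IP_TOS` socket option (Linux does; other platforms and intermediate networks may ignore or rewrite the marking).

```python
import socket

# Expedited Forwarding is DSCP value 46 (a 6-bit code point).
DSCP_EF = 46


def dscp_to_tos(dscp: int) -> int:
    """Return the 8-bit ToS byte that carries the given DSCP value.

    DSCP occupies the upper six bits of the byte; the lower two bits
    (used for ECN) are left at zero.
    """
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP must be in the range 0-63")
    return dscp << 2


def open_marked_udp_socket(dscp: int = DSCP_EF) -> socket.socket:
    """Create an IPv4 UDP socket whose outgoing packets carry the given DSCP.

    Hypothetical helper for illustration; assumes the OS supports IP_TOS.
    """
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_TOS, dscp_to_tos(dscp))
    return sock


print(dscp_to_tos(DSCP_EF))  # 184 (0xB8)
```

A voice application would send its media over a socket created this way, so that a class matching DSCP `ef` on the router picks the traffic up.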

QoS Protocols and Standards

Besides the Differentiated Services Code Point (DSCP) mentioned above, several other protocols and standards support QoS.

Class-Based Weighted Fair Queueing (CBWFQ)

CBWFQ allows you to allocate bandwidth to different traffic classes, ensuring fair treatment for each type. For example, you might want to ensure that video streaming gets at least 60% of the bandwidth while web browsing gets 30%.
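To make the 60%/30% example concrete, here is a small Python model of how guaranteed shares might play out on a congested link. It is a simplified sketch of the sharing behavior, not the actual CBWFQ scheduler: the function name `allocate`, the 100 Mbps link, and the `default` class holding the remaining 10% are all assumptions for illustration. Bandwidth that one class does not need is redistributed to the others in proportion to their weights.

```python
def allocate(link_kbps, guarantees, demands):
    """Split link capacity among classes in proportion to their guarantees.

    guarantees: class name -> weight (percent of the link), all positive
    demands:    class name -> offered load in kbps
    Capacity a class does not use is shared among the remaining classes.
    """
    alloc = {c: 0.0 for c in demands}
    remaining = float(link_kbps)
    active = {c for c in demands if demands[c] > 0}

    while active and remaining > 1e-9:
        total_weight = sum(guarantees[c] for c in active)
        pool = remaining  # capacity to share out in this round
        satisfied = set()
        for c in active:
            share = pool * guarantees[c] / total_weight
            take = min(share, demands[c] - alloc[c])
            alloc[c] += take
            remaining -= take
            if demands[c] - alloc[c] <= 1e-9:
                satisfied.add(c)
        if not satisfied:
            break  # every class used its full share; the link is full
        active -= satisfied
    return alloc


guarantees = {"video": 60, "web": 30, "default": 10}
demands = {"video": 80_000, "web": 50_000, "default": 5_000}
for name, kbps in allocate(100_000, guarantees, demands).items():
    print(f"{name}: {kbps:.0f} kbps")
```

In this run the `default` class only needs half of its share, so the leftover capacity goes to video and web in a 60:30 ratio, and video ends up with more than its 60% minimum.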

Resource Reservation Protocol (RSVP)

RSVP reserves resources across an IP network and helps maintain a consistent QoS level. Although less common in modern SASE implementations, RSVP can still be handy in certain scenarios.

On Cisco routers, for example, RSVP is enabled per interface with the `ip rsvp bandwidth` command, which allows the interface to reserve the necessary bandwidth for critical applications.

IEEE 802.1p

IEEE 802.1p defines a traffic prioritization system for Ethernet networks. It uses a 3-bit priority field in the 802.1Q VLAN tag to indicate one of eight priority levels. Each frame carries a tag that the network hardware respects, so switches forward higher-priority traffic ahead of lower-priority frames.
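To show where the priority actually lives, the sketch below builds the 4-byte 802.1Q tag in Python. The tag consists of the 0x8100 tag protocol identifier followed by 16 bits of tag control information: a 3-bit priority code point (PCP), a 1-bit drop eligible indicator, and a 12-bit VLAN ID. The function names are ours, chosen for illustration.

```python
import struct

TPID_8021Q = 0x8100  # EtherType value that identifies an 802.1Q-tagged frame


def build_vlan_tag(pcp: int, vlan_id: int, dei: int = 0) -> bytes:
    """Pack an 802.1Q tag: TPID followed by PCP (3 bits), DEI (1 bit), VID (12 bits)."""
    if not 0 <= pcp <= 7:
        raise ValueError("PCP must be in the range 0-7")
    if dei not in (0, 1):
        raise ValueError("DEI must be 0 or 1")
    if not 0 <= vlan_id <= 4095:
        raise ValueError("VLAN ID must be in the range 0-4095")
    tci = (pcp << 13) | (dei << 12) | vlan_id
    return struct.pack("!HH", TPID_8021Q, tci)


def read_pcp(tag: bytes) -> int:
    """Extract the 3-bit priority from a 4-byte 802.1Q tag."""
    _, tci = struct.unpack("!HH", tag)
    return tci >> 13


tag = build_vlan_tag(pcp=5, vlan_id=100)
print(tag.hex())      # 8100a064
print(read_pcp(tag))  # 5
```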

Integrated Services (IntServ)

IntServ guarantees that services like streaming video and audio can reach their destinations without interruptions. It provides a fine-grained QoS system, which is often contrasted with DiffServ's coarse-grained control.

Every router in the IntServ system must implement its protocols. When an application requires a specific QoS guarantee, it must make an individual reservation. This reservation is described by two components: Traffic SPECification (TSPEC) and Request SPECification (RSPEC).

TSPECs define what the traffic looks like. They are typically expressed as token bucket parameters: a token rate that controls the average traffic flow rate and a bucket depth that controls the allowed 'burstiness' of traffic. For example, a video with a refresh rate of 75 frames per second, where each frame takes 10 packets, might have a token rate of 750 Hz and a bucket depth of 10. This bucket depth accommodates the burst of sending an entire frame at once.
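The token rate and bucket depth from the video example can be tried out with a minimal token bucket in Python. This is a sketch under simple assumptions: one token per packet, a bucket that starts full, and timestamps passed in explicitly so the behavior is deterministic. The class and method names are our own.

```python
class TokenBucket:
    """Token bucket with a fill rate (tokens per second) and a maximum depth."""

    def __init__(self, rate: float, depth: float):
        self.rate = rate
        self.depth = depth
        self.tokens = depth  # assume the bucket starts full
        self.last = 0.0      # time of the previous check, in seconds

    def conforms(self, now: float, packets: int = 1) -> bool:
        """Return True and spend tokens if the burst fits the traffic profile."""
        elapsed = now - self.last
        self.tokens = min(self.depth, self.tokens + elapsed * self.rate)
        self.last = now
        if packets <= self.tokens:
            self.tokens -= packets
            return True
        return False


bucket = TokenBucket(rate=750, depth=10)
print(bucket.conforms(now=0.0, packets=10))   # True: a whole 10-packet frame fits
print(bucket.conforms(now=0.0, packets=1))    # False: the bucket is empty
print(bucket.conforms(now=0.02, packets=10))  # True: refilled, capped at depth 10
```

The depth caps how large a burst can be, while the rate determines how quickly the allowance for the next frame builds up again.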

Conversely, RSPECs specify what the flow requires from the network. The requirement can be the usual internet 'best effort', which needs no reservation and is typical for web pages and FTP applications. The 'Controlled Load' setting mimics a lightly loaded network, which may have occasional glitches but generally maintains a constant delay and drop rate. The 'Guaranteed' setting provides a firm bound on delay for applications that cannot tolerate late packets.

However, for IntServ to work, all routers in the traffic path must support it and store state for every reserved flow. Thus, IntServ is feasible on a small scale but becomes resource-intensive as the system scales to larger networks or the Internet.

Multiprotocol Label Switching (MPLS)

MPLS directs data from one node to the next based on short path labels rather than long network addresses. This reduces the complexity and improves the speed of the data transfer process.

MPLS is especially effective when combined with traffic prioritization. For example, a typical router configuration assigns IP addresses to the interfaces, specifies the range for MPLS labels, and then creates a class map for VoIP traffic and a policy map to prioritize it. Applying the policy map to the interface ensures that VoIP traffic gets the necessary bandwidth.
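Part of what makes MPLS and QoS fit together is that each label stack entry has room for a class marking. The 32-bit entry holds a 20-bit label, a 3-bit Traffic Class field (formerly called EXP), a bottom-of-stack flag, and an 8-bit TTL. The Python sketch below packs and unpacks one entry; the function names and the sample values are illustrative assumptions.

```python
import struct


def encode_mpls_entry(label: int, tc: int, bottom_of_stack: bool, ttl: int) -> bytes:
    """Pack one MPLS label stack entry: label (20) | TC (3) | S (1) | TTL (8)."""
    if not 0 <= label < 2**20:
        raise ValueError("label must fit in 20 bits")
    if not 0 <= tc <= 7:
        raise ValueError("traffic class must be in the range 0-7")
    if not 0 <= ttl <= 255:
        raise ValueError("TTL must be in the range 0-255")
    value = (label << 12) | (tc << 9) | (int(bottom_of_stack) << 8) | ttl
    return struct.pack("!I", value)


def decode_mpls_entry(data: bytes) -> dict:
    """Unpack a 4-byte MPLS label stack entry into its fields."""
    (value,) = struct.unpack("!I", data)
    return {
        "label": value >> 12,
        "tc": (value >> 9) & 0x7,
        "bottom_of_stack": bool((value >> 8) & 0x1),
        "ttl": value & 0xFF,
    }


entry = encode_mpls_entry(label=16, tc=5, bottom_of_stack=True, ttl=64)
print(entry.hex())               # 00010b40
print(decode_mpls_entry(entry))
```

A label-switching router can read the Traffic Class bits to pick a queue without looking at the IP header underneath.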

Quality of Service is critical in environments where different services share the same network infrastructure. MPLS and QoS together ensure that time-sensitive data gets sufficient bandwidth.

Quality of Service (QoS) use cases

Bandwidth management

QoS helps manage bandwidth, ensuring smooth and efficient data flow. Imagine streaming a high-definition video conference while someone else downloads a large file. Without proper bandwidth control, that call could turn into a pixelated mess.

Suppose we're using a SASE solution that supports QoS policies. We can create specific rules to prioritize different types of traffic. VoIP traffic can get a generous bandwidth limit, with video streaming getting a medium priority and file downloads classified as the least important. This ensures critical communications remain uninterrupted, even when the network is under heavy load.
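The three-tier ordering described above can be sketched as a strict priority queue in Python. This is a toy model, not any SASE vendor's API: the class names in `PRIORITY`, the `PriorityScheduler` class, and the packet strings are all hypothetical. A real scheduler would usually also cap the top class so lower classes are never starved completely.

```python
import heapq
import itertools

# Lower number = served first. The class names are illustrative.
PRIORITY = {"voip": 0, "video": 1, "download": 2}


class PriorityScheduler:
    """Strict priority queue: always send the highest-priority packet waiting."""

    def __init__(self):
        self._heap = []
        self._counter = itertools.count()  # keeps FIFO order within a class

    def enqueue(self, traffic_class: str, packet: str) -> None:
        entry = (PRIORITY[traffic_class], next(self._counter), packet)
        heapq.heappush(self._heap, entry)

    def dequeue(self):
        """Return the next packet to send, or None if the queue is empty."""
        if not self._heap:
            return None
        return heapq.heappop(self._heap)[2]


sched = PriorityScheduler()
sched.enqueue("download", "file-chunk-1")
sched.enqueue("video", "video-frame-1")
sched.enqueue("voip", "voice-sample-1")
sched.enqueue("download", "file-chunk-2")

print([sched.dequeue() for _ in range(4)])
# ['voice-sample-1', 'video-frame-1', 'file-chunk-1', 'file-chunk-2']
```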

Additionally, SASE can leverage advanced techniques like traffic shaping and load balancing. These techniques distribute network resources efficiently. For example, if multiple branch offices are accessing the same cloud application, load balancing can help distribute the load evenly to prevent any single point from becoming a bottleneck.

If one link is congested, traffic can be rerouted through another less congested path, ensuring optimal performance. With SASE, these adjustments can also be automated, which removes the burden of manual tuning.

Latency mitigation

Latency can significantly affect the overall user experience. QoS allows you to segment and prioritize your traffic, which boosts network speed and efficiency for critical applications.

Achieving low latency and high QoS in SASE is also about real-time monitoring and adaptive adjustments. For instance, if our network monitoring tools detect congestion at a particular edge location, we might dynamically reroute traffic through a less congested path.
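As a rough illustration of such a rerouting decision, the Python function below picks a path from measured latencies. The function name, the edge location names, and the 10 ms switching threshold are assumptions made for the example. The threshold adds a little hysteresis so that traffic does not flap between two paths with similar latency.

```python
def choose_path(current: str, latency_ms: dict, min_gain_ms: float = 10.0) -> str:
    """Pick the path to use given the latest latency measurements.

    Stay on the current path unless another path is faster by at least
    min_gain_ms, so small fluctuations do not trigger a reroute.
    """
    best = min(latency_ms, key=latency_ms.get)
    if current not in latency_ms:
        return best  # current path is gone; take the fastest one
    if latency_ms[current] - latency_ms[best] >= min_gain_ms:
        return best
    return current


# Congestion at edge-a: the alternative is 45 ms faster, so we switch.
print(choose_path("edge-a", {"edge-a": 80.0, "edge-b": 35.0}))  # edge-b

# Only 5 ms apart: not worth rerouting.
print(choose_path("edge-a", {"edge-a": 40.0, "edge-b": 35.0}))  # edge-a
```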

Jitter reduction

Jitter is the variation in packet arrival times. It can seriously affect real-time communication like VoIP or video conferencing. You can leverage QoS policies to mitigate jitter and ensure a smooth network experience.
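Before tuning policies, it helps to measure jitter. One common estimator is the running interarrival jitter defined for RTP in RFC 3550, which smooths the change in transit time between consecutive packets with a gain of 1/16. Here is a minimal Python sketch; the function name and the sample timestamps (in milliseconds) are our own.

```python
def interarrival_jitter(send_times, recv_times):
    """Estimate jitter with the RFC 3550 running average.

    For each packet, D is the change in transit time compared with the
    previous packet, and the estimate is updated as J += (|D| - J) / 16.
    """
    jitter = 0.0
    prev_transit = None
    for sent, received in zip(send_times, recv_times):
        transit = received - sent
        if prev_transit is not None:
            d = abs(transit - prev_transit)
            jitter += (d - jitter) / 16
        prev_transit = transit
    return jitter


# Packets sent every 20 ms with a steady 10 ms transit time: no jitter.
print(interarrival_jitter([0, 20, 40], [10, 30, 50]))  # 0.0

# The second packet takes 16 ms longer than the first.
print(interarrival_jitter([0, 20], [10, 46]))          # 1.0
```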

Sometimes, you may need to handle multiple QoS policies for different types of traffic. For example, you might prioritize VoIP and also video conferencing tools like Zoom or Microsoft Teams.

Packet loss prevention

Packet loss occurs when data packets traveling across a network fail to reach their destination. This can lead to poor performance, especially in real-time VoIP or video conferencing applications.

To mitigate packet loss, we can use techniques like Forward Error Correction (FEC) or Packet Loss Concealment (PLC). These methods help recover or conceal lost packets.
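To give a feel for how FEC recovers data, here is one of the simplest possible schemes in Python: a single XOR parity packet sent alongside a group of data packets. It is a sketch that assumes all packets in the group have the same length and that at most one packet per group is lost. Production FEC schemes are more elaborate, and the function names here are our own.

```python
from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def make_parity(packets):
    """Build one parity packet as the XOR of all data packets in the group."""
    return reduce(xor_bytes, packets)


def recover_missing(received, parity):
    """Rebuild the single lost packet, marked as None in the received list."""
    missing = [i for i, p in enumerate(received) if p is None]
    if len(missing) != 1:
        raise ValueError("XOR parity can recover exactly one lost packet")
    present = [p for p in received if p is not None]
    return reduce(xor_bytes, present, parity)


group = [b"AAAA", b"BBBB", b"CCCC"]
parity = make_parity(group)

# The second packet is lost in transit; the receiver rebuilds it from the rest.
print(recover_missing([b"AAAA", None, b"CCCC"], parity))  # b'BBBB'
```

The cost is one extra packet per group, which is the usual FEC trade-off: a little more bandwidth in exchange for not waiting on retransmissions.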

Traffic shaping

Traffic shaping entails controlling data flow to reduce latency, jitter, and packet loss. Imagine you are running a VoIP application, and a big file download starts. Traffic shaping allows you to prioritize VoIP traffic over the download, ensuring smooth conversations.
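Shaping differs from simply dropping excess traffic: packets that exceed the configured rate are held back and released later. The Python sketch below models a very simple shaper that releases packets at a fixed byte rate. The function name and the 1000 bytes-per-second rate are assumptions picked to keep the arithmetic easy to follow.

```python
def shape(packets, rate_bytes_per_s):
    """Compute when each packet may leave a shaper with a fixed output rate.

    packets: list of (arrival_time_s, size_bytes), sorted by arrival time.
    Returns the release time of each packet. A packet is delayed until the
    previous one has finished transmitting at the shaped rate.
    """
    next_free = 0.0  # earliest time the shaper can start the next packet
    releases = []
    for arrival, size in packets:
        release = max(arrival, next_free)
        releases.append(release)
        next_free = release + size / rate_bytes_per_s
    return releases


# A burst of three 500-byte packets arrives at once on a link shaped to 1000 B/s.
print(shape([(0.0, 500), (0.0, 500), (0.0, 500)], 1000))  # [0.0, 0.5, 1.0]
```

The burst is smoothed into an even stream, which is what keeps a large download from crowding out a concurrent VoIP call.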

We typically do this by categorizing different types of traffic and applying policies to them. On a typical SASE platform, for example, a policy might assign VoIP and video conferencing traffic a high QoS priority, while general web surfing receives normal priority. This ensures that critical applications like VoIP get the bandwidth they need, even during peak usage times.

Both traffic shaping and QoS are configured and enforced from the cloud in a SASE architecture. This centralized approach makes it easier to adapt and respond to changing network conditions on the fly. It ensures that you can maintain performance without manually configuring individual network devices.

Because network conditions can change, what worked yesterday might not work today. Therefore, QoS policies need to be carefully planned and constantly monitored. It is crucial to use tools that can provide real-time analytics and enable easy and quick adjustments.

Also, remember that QoS configurations can vary depending on your network equipment and your organization's specific requirements.

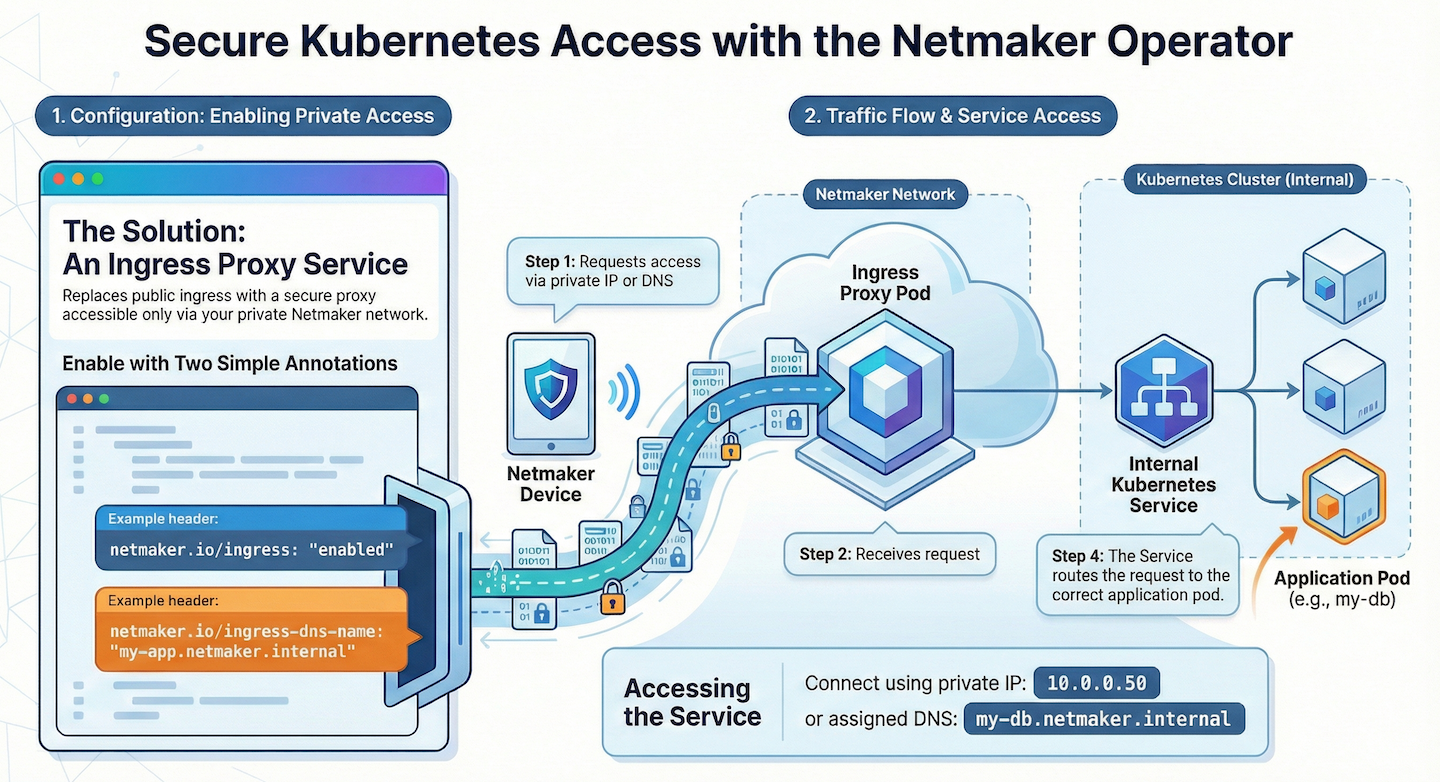

Enhancing Network Efficiency with Netmaker

Netmaker offers a robust solution for optimizing network efficiency through its advanced mesh networking capabilities. By leveraging Netmaker's WireGuard-based VPN technology, businesses can ensure secure and high-performance connectivity across distributed environments. This is particularly beneficial for implementing Quality of Service (QoS) strategies, as Netmaker allows for seamless prioritization of network traffic. With its ability to manage complex network architectures effectively, Netmaker ensures that critical applications like video conferencing and SaaS platforms receive the necessary bandwidth, minimizing latency and ensuring uninterrupted service delivery.

Furthermore, Netmaker's flexibility in deployment—whether on virtual machines, bare metal, or within containerized environments like Docker and Kubernetes—means that it can be integrated into existing infrastructure with ease. Its compatibility with modern network architectures makes it an ideal choice for businesses looking to enhance their QoS implementations. By maintaining control over network interfaces and firewall rules, Netmaker provides a reliable framework for managing network traffic efficiently. To explore how Netmaker can improve your network's QoS and overall performance, sign up and get started today.
