Observability vs Monitoring: Differences & Benefits

Monitoring and observability are crucial concepts for managing company networks, but they serve distinct purposes. While monitoring is excellent for detecting known problems, observability allows companies to diagnose and troubleshoot unknown, emergent network behaviors, making it essential in dynamic, complex environments. This article highlights the differences between monitoring and observability and their benefits for IT networking.

What is monitoring?

Monitoring refers to the process of collecting and analyzing data to ensure your systems are running smoothly. It entails keeping an eye on key metrics, like server uptime, CPU usage, memory utilization, and network traffic.

For instance, if your web server's CPU usage suddenly spikes to 100%, monitoring tools can alert you before the system crashes.

Monitoring tools like Nagios or Zabbix can help you track the health of your infrastructure. They can be set up to ping devices, check service statuses, and even keep an eye on environmental factors like temperature in a server room.
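As a rough illustration of what a basic service check does, here is a minimal Python sketch of a TCP reachability probe. It is not how Nagios or Zabbix implement their checks. The function name `check_tcp_service` is hypothetical, and any hosts and ports you probe with it are your own.

```python
import socket


def check_tcp_service(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        # create_connection resolves the host and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and DNS resolution failures.
        return False
```

A scheduler would call this every minute or so for each service (for example, `check_tcp_service("db.internal", 5432)` with a hypothetical database host) and record UP or DOWN. Checking the TCP port only proves the service is listening, not that it answers correctly, which is why real tools also offer protocol-level checks.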

Let's say you're responsible for an e-commerce website. Monitoring would involve tracking page load times and transaction success rates. If page loads slow down, you might get an alert that prompts you to check server load or database performance. This immediate feedback helps you react quickly to potential issues, reducing downtime and improving user experience.

Monitoring isn't just about catching problems as they happen. It's also about collecting long-term data to identify trends. For example, by analyzing CPU load over several months, you might notice that your servers are under heavy use during specific times of the day. With this information, you can plan your capacity more effectively, perhaps by adding more resources during peak hours.

However, traditional monitoring focuses on known issues. You set thresholds and rules based on your understanding of the system. It’s like having a checklist that you follow.

If everything looks good on the checklist, you assume the system is healthy. But what happens when there's an issue you didn't anticipate? This is where monitoring alone might fall short.

Key components of monitoring

Metrics collection

Monitoring tools gather data points like CPU usage, memory utilization, disk space, and network traffic. Nagios and Zabbix, for example, continuously check your servers and devices for these metrics. So, if your database server's memory usage starts creeping up, you will know about it before it crashes.
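At its core, metrics collection is a periodic poll. The sketch below uses only the Python standard library to sample disk usage and, on Unix-like systems, the one-minute load average. It is a toy stand-in for what a dedicated monitoring agent does, and the names `collect_snapshot` and `poll` are hypothetical.

```python
import os
import shutil
import time
from typing import Dict, List


def collect_snapshot(path: str = "/") -> Dict[str, float]:
    """Gather a few host metrics into one timestamped sample."""
    disk = shutil.disk_usage(path)
    snapshot = {
        "timestamp": time.time(),
        "disk_used_pct": round(100 * disk.used / disk.total, 1),
    }
    # os.getloadavg() only exists on Unix-like systems, so include it when available.
    if hasattr(os, "getloadavg"):
        snapshot["load_1m"] = os.getloadavg()[0]
    return snapshot


def poll(samples: int, interval_s: float = 60.0) -> List[Dict[str, float]]:
    """Collect `samples` snapshots, sleeping `interval_s` seconds between them."""
    history = []
    for i in range(samples):
        history.append(collect_snapshot())
        if i < samples - 1:
            time.sleep(interval_s)
    return history
```

Storing each snapshot with its timestamp is what later makes alerting and historical analysis possible.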

Alerting

Metrics alone aren't enough. You need to be notified when something goes wrong. For instance, if your web server's CPU usage spikes to 100%, you can't be sitting there, staring at a dashboard all day.

Monitoring tools can be configured to send out alerts—email, SMS, or even push notifications—to get your attention. This immediacy means you can jump on issues as they arise, minimizing downtime and keeping your systems running smoothly.
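Threshold alerting boils down to comparing each new sample against rules you defined in advance. The sketch below is loosely modeled on the WARNING/CRITICAL/UNKNOWN states that Nagios plugins report. The `Threshold` class, the metric names, and the limits are illustrative assumptions, and `notify` only prints where a real setup would send email, SMS, or a push notification.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Threshold:
    metric: str
    warning: float
    critical: float


def evaluate(sample: Dict[str, float], thresholds: List[Threshold]) -> List[str]:
    """Return one alert message for every metric that crosses its threshold."""
    alerts = []
    for t in thresholds:
        value = sample.get(t.metric)
        if value is None:
            # Missing data is worth flagging too: the collector itself may be down.
            alerts.append(f"UNKNOWN {t.metric}: no data")
        elif value >= t.critical:
            alerts.append(f"CRITICAL {t.metric}={value}")
        elif value >= t.warning:
            alerts.append(f"WARNING {t.metric}={value}")
    return alerts


def notify(alerts: List[str]) -> None:
    # Placeholder delivery channel: print instead of email/SMS/push.
    for message in alerts:
        print(message)


rules = [Threshold("cpu_pct", 80, 95), Threshold("mem_pct", 85, 95)]
notify(evaluate({"cpu_pct": 100.0, "mem_pct": 70.0}, rules))  # prints: CRITICAL cpu_pct=100.0
```

Note that every rule here encodes a problem you already expected, which is exactly the limitation of threshold-based monitoring described above.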

Logging

Detailed logs can tell you a lot about what's happening under the hood. If an application crashes, logs can help you pinpoint the cause.

For example, if your e-commerce site's transaction success rate suddenly dips, the logs might show a database connection error or a failed API call. Having these logs handy can save you from playing detective, allowing you to resolve issues more quickly.
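When the success rate dips, a first pass over the logs is often just "which error is most common?" The sketch below assumes a hypothetical plain-text log format of `<timestamp> <LEVEL> <component> <message>` and counts ERROR entries per component and message, so the dominant cause surfaces first.

```python
import re
from collections import Counter
from typing import Iterable, List, Tuple

# Hypothetical log format: "<ISO timestamp> <LEVEL> <component> <message>"
LINE = re.compile(r"^(?P<ts>\S+) (?P<level>[A-Z]+) (?P<component>\S+) (?P<message>.*)$")


def summarize_errors(lines: Iterable[str]) -> List[Tuple[Tuple[str, str], int]]:
    """Count ERROR entries per (component, message), most frequent first."""
    counts: Counter = Counter()
    for line in lines:
        match = LINE.match(line.strip())
        if match and match["level"] == "ERROR":
            counts[(match["component"], match["message"])] += 1
    return counts.most_common()
```

Fed a few lines such as `2024-05-01T10:00:02 ERROR checkout db connection refused`, it would rank the database connection error above a less frequent API timeout.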

Visualization

Raw data is great, but visualizing it makes spotting trends and anomalies much easier. Tools like Grafana can take your monitoring data and turn it into graphs and dashboards.

Imagine having a dashboard that shows server load, network traffic, and error rates—all in real-time. This kind of visualization helps you get a quick sense of overall system health.

Historical data analysis

This goes beyond just looking at real-time data. By analyzing historical data, you can identify patterns and trends. For example, if you notice that your servers are consistently under heavy load every Monday morning, you can plan for it.

Maybe that means adding more resources to handle the load or optimizing existing ones. This proactive approach helps you stay ahead of potential issues.
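Spotting a recurring peak like "every Monday morning" amounts to grouping historical samples into buckets and averaging them. A minimal sketch, assuming you have `(timestamp, cpu_percent)` pairs from your monitoring history. The function name and the weekday-plus-hour bucketing are illustrative choices.

```python
from collections import defaultdict
from datetime import datetime
from statistics import mean
from typing import Iterable, List, Tuple

DAYS = ("Mon", "Tue", "Wed", "Thu", "Fri", "Sat", "Sun")


def peak_windows(
    samples: Iterable[Tuple[datetime, float]], top: int = 3
) -> List[Tuple[Tuple[str, int], float]]:
    """Average CPU% per (weekday, hour) bucket and return the busiest buckets."""
    buckets = defaultdict(list)
    for ts, cpu in samples:
        # Bucket by weekday and hour so recurring peaks (e.g. Monday 09:00) stand out.
        buckets[(DAYS[ts.weekday()], ts.hour)].append(cpu)
    averages = {key: mean(values) for key, values in buckets.items()}
    return sorted(averages.items(), key=lambda kv: kv[1], reverse=True)[:top]
```

If the top bucket over several months is `("Mon", 9)`, that is a concrete signal to schedule extra capacity before Monday morning.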

All these components work together to give you a comprehensive view of your network's health. But remember, traditional monitoring focuses on known issues, like following a checklist. If everything looks good on that list, you assume all is well. However, this approach might fall short if you encounter an unanticipated problem.

What is observability?

Observability goes beyond monitoring by focusing on understanding the internal state of your systems based on the data they produce. While monitoring involves keeping an eye on predefined metrics, observability is about gaining insights, especially into issues you didn't anticipate. It's like having x-ray vision for your systems.

Let's imagine you are running that same e-commerce website. With observability, you wouldn't just track page load times and transaction success rates. You would dig deeper.

For example, if users are experiencing slow checkout times, monitoring might alert you to increased server CPU usage. But observability lets you correlate this spike with recent code changes or third-party API latencies, giving you a more comprehensive understanding of what's really happening.

To achieve observability, you rely on three pillars: metrics, logs, and traces. You may already collect metrics for monitoring, but observability needs richer, more contextual data, and that applies to logs in particular.

Rather than just recording errors, you would capture contextual information about user actions, database queries, and configuration changes. This way, when tracking down an issue, you get a full picture of the contributing factors.

Traces are another key component. Tracing involves following a request as it travels through various services in your network. Imagine a user making a purchase on your site.

A trace helps you see each step of that transaction—how it moves from the web server to the payment gateway and back. If there's a delay, a trace can pinpoint exactly where it's happening. Maybe the payment gateway is slow, or perhaps your database is overwhelmed. Tracing uncovers these nuances.
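Conceptually, a trace is a tree of timed spans, one per step, each linked to its parent. The sketch below is a toy, in-process tracer meant only to show that structure. Real tracing systems such as Jaeger, Zipkin, or OpenTelemetry also propagate a trace ID between services over the network, which this sketch does not attempt. The `Span` and `Tracer` classes are hypothetical, and spans are linked by name purely for simplicity.

```python
import time
from contextlib import contextmanager
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Span:
    name: str
    parent: Optional[str]  # name of the enclosing span; None for the root
    start: float
    end: float = 0.0

    @property
    def duration(self) -> float:
        return self.end - self.start


class Tracer:
    """Toy tracer: records nested, timed spans for one request."""

    def __init__(self, clock: Callable[[], float] = time.perf_counter):
        self.clock = clock
        self.spans: List[Span] = []
        self._stack: List[str] = []

    @contextmanager
    def span(self, name: str):
        parent = self._stack[-1] if self._stack else None
        current = Span(name, parent, self.clock())
        self._stack.append(name)
        try:
            yield current
        finally:
            current.end = self.clock()
            self._stack.pop()
            self.spans.append(current)

    def slowest_leaf(self) -> Span:
        """Return the slowest span with no children: the likely bottleneck."""
        parents = {s.parent for s in self.spans}
        leaves = [s for s in self.spans if s.name not in parents]
        return max(leaves, key=lambda s: s.duration)
```

Wrapping a purchase as `with tracer.span("checkout"):` with nested `tracer.span("payment")` and `tracer.span("database")` blocks would let `slowest_leaf()` point at whichever step held up the request.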

Observability also encourages a culture of proactive investigation. Rather than waiting for alerts, you explore your data, asking open-ended questions.

For example, you might wonder, "How does the load on our database fluctuate during major sales events?" Using observability tools, you can visualize and analyze these patterns, enabling you to optimize your infrastructure before the next big sale.

So, while monitoring helps you track the health of your systems through predefined checks, observability gives you the tools to understand the "why" behind issues, especially those you didn't expect. This deeper insight helps you create more resilient and efficient systems.

Key components of observability

Metrics

With observability, you look at more than just CPU usage or memory stats; you need granular data. For instance, instead of just tracking overall server load, you capture specific metrics like "time spent querying the database" or "latency per API call."

This level of detail helps you identify inefficiencies and bottlenecks that general metrics might miss. On the e-commerce site, for example, you wouldn't just track transaction counts; you would also measure how long each step of a transaction takes, from cart to checkout.
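Per-step timing is easy to record and summarize once each step is labeled. The sketch below keeps latency samples per checkout step and reports the median and 95th percentile. The `StepTimer` class and the step names are hypothetical, and a production system would typically use histogram metrics rather than keeping every raw sample in memory.

```python
from collections import defaultdict
from statistics import median, quantiles
from typing import Dict, List


class StepTimer:
    """Record latency samples (seconds) per step and summarize them."""

    def __init__(self) -> None:
        self.samples: Dict[str, List[float]] = defaultdict(list)

    def record(self, step: str, seconds: float) -> None:
        self.samples[step].append(seconds)

    def summary(self) -> Dict[str, Dict[str, float]]:
        report = {}
        for step, values in self.samples.items():
            # quantiles(n=20) yields 19 cut points; the last is the 95th percentile.
            # "inclusive" keeps the estimate within the observed range.
            if len(values) >= 2:
                p95 = quantiles(values, n=20, method="inclusive")[-1]
            else:
                p95 = values[0]
            report[step] = {"count": len(values), "p50": median(values), "p95": p95}
        return report
```

Comparing `p95` across steps such as `cart`, `payment`, and `confirmation` shows which step drives a slow checkout, even when overall server load looks normal.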

Logs

With observability, logs aren't just about errors. They tell a story. You capture contextual information, like user actions, specific database queries, and even feature flags.

Let's say users are having issues with a new feature. Detailed logs can help you see if the problem is linked to a particular user behavior or a specific configuration.

Using tools like the ELK stack (Elasticsearch, Logstash, Kibana), you centralize these logs. Rather than sifting through logs spread across servers, you can query them in one place, making it easier to spot patterns.

Traces

Tracing, another cornerstone, lets you follow a request's journey through your system. Suppose a customer is placing an order. With tools like Jaeger or Zipkin, you trace that action through each microservice it touches: web server, payment gateway, database, and so on.

If the process slows down, the trace pinpoints exactly where the lag is happening. Maybe the payment service is delayed or the database is underperforming. Tracing provides that visibility.

Contextual information

You don't just look at raw data. You enrich it with context. For example, when logging database queries, you also log which user performed the action and which feature was being used. This helps you see the bigger picture.

If your e-commerce site's discount codes fail intermittently, enriched logs might show that the failures coincide with a specific application version or happen only during high-traffic periods.
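One common way to enrich logs is structured (JSON) logging, where context travels as fields rather than being buried in free text. A minimal sketch using Python's standard `logging` module and its `extra` argument. The logger name, the field names (`user_id`, `feature`, `app_version`, `query`), and their values are illustrative assumptions.

```python
import io
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each record as one JSON object, including known context fields."""

    CONTEXT_FIELDS = ("user_id", "feature", "app_version", "query")

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "time": self.formatTime(record),
            "level": record.levelname,
            "message": record.getMessage(),
        }
        # Keys passed via `extra=` become attributes on the record.
        for name in self.CONTEXT_FIELDS:
            if hasattr(record, name):
                entry[name] = getattr(record, name)
        return json.dumps(entry)


def make_logger(stream) -> logging.Logger:
    logger = logging.getLogger("checkout")
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.handlers = [handler]
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger


buffer = io.StringIO()
log = make_logger(buffer)
log.warning(
    "discount code rejected",
    extra={"user_id": "u-123", "feature": "discount_codes", "app_version": "2.4.1"},
)
print(buffer.getvalue())
```

Because every entry carries `app_version` as a field, a log platform can group discount-code failures by version directly instead of relying on text search.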

Correlation

Observability isn't just about collecting data; it's about making connections. Take your e-commerce example: if your checkout page is slow, you don't just see server CPU spikes.

You correlate those spikes with recent code deployments, third-party API latencies, or even unusual user behavior. This correlation helps you understand not just what's wrong, but why it's happening.
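The simplest form of this correlation is temporal: for each latency spike, list the deployments that landed shortly before it. The sketch below assumes you already have spike timestamps (from metrics) and deployment records (from your CI/CD system). The 30-minute window, the record shape, and the function names are assumptions. A match narrows the suspects; it does not prove causation.

```python
from datetime import datetime, timedelta
from typing import Dict, List


def recent_deploys(
    spike_time: datetime, deploys: List[Dict], window: timedelta = timedelta(minutes=30)
) -> List[Dict]:
    """Return deployments that happened within `window` before a spike."""
    return [d for d in deploys if spike_time - window <= d["time"] <= spike_time]


def correlate(
    spikes: List[datetime], deploys: List[Dict], window: timedelta = timedelta(minutes=30)
) -> Dict[datetime, List[str]]:
    """Map each spike timestamp to the services deployed just before it."""
    return {s: [d["service"] for d in recent_deploys(s, deploys, window)] for s in spikes}
```

A spike with no preceding deployment points the investigation elsewhere, for example toward third-party API latency or unusual traffic.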

Exploration

Rather than waiting for alerts, you proactively investigate. You ask questions like, "How does traffic vary during sales events?" or "What impact does a new feature have on server load?"

Using observability tools, you dive into the data, looking for trends and answers. This proactive stance helps you optimize your systems before issues arise.

Key differences between observability and monitoring

While monitoring and observability both aim to help you manage your systems, they do so in fundamentally different ways. Monitoring is about tracking predefined metrics to keep an eye on system health.

Observability, on the other hand, digs deeper. It's about understanding the internal state of your systems based on the data they produce. It gives you the context you need to diagnose issues you didn't anticipate.

Another key difference is in how you handle logs. In monitoring, logs are often used to capture errors. If your application crashes, you look at logs to find the issue. With observability, logs tell a much richer story. You capture contextual details like user actions, feature flags, and database queries.

Imagine users are reporting intermittent issues with applying discount codes. Observability means you can correlate these logs with user sessions, times of day, and recent deployments, giving you a fuller picture.

Metrics themselves are also more granular in observability. While monitoring might track overall server load, observability would dig into specifics like "time spent querying the database" or "latency per API call." This granularity helps you identify inefficiencies that general metrics might miss.

For instance, if checkout times are slow, observability lets you see if the issue is with the cart, payment processing, or confirmation steps.

The culture around these practices is different too. Monitoring is reactive. You set thresholds and wait for alerts. Observability encourages proactive investigation. Instead of waiting for something to break, you explore your data to find potential issues before they become problems.

You might ask, "How does user traffic vary during sales events?" and use observability tools to dive into the data, spotting trends that monitoring might overlook.

So, while monitoring keeps you informed through predefined checks and thresholds, observability gives you the complete picture, enriched with context and correlations. This deeper insight allows you to build more resilient, efficient systems.

Benefits of monitoring in company networks

Provides real-time alerts

When something goes wrong—like a server's CPU usage reaching 100%—you want to know instantly. This keeps small issues from snowballing into bigger problems.

For example, if your web server starts to experience high CPU load during a holiday sale, monitoring tools can send you alerts before the server crashes. This allows you to act quickly, ensuring your e-commerce site remains available to customers.

Consistent overview of your network's health

With monitoring, you continuously track metrics like server uptime, CPU usage, memory utilization, and network traffic. This continuous surveillance means you don't have to guess whether your systems are performing well; you know.

For instance, if your database server shows a gradual increase in memory usage over weeks, monitoring lets you catch this trend early. You can then allocate more resources or optimize existing ones to avoid a potential crash.

Simplifies compliance and auditing

By keeping detailed logs and regular snapshots of system performance, you create an audit trail. This is valuable for compliance with industry regulations or internal policies.

Let's say you are in the finance sector, where regulations require you to maintain a certain level of system availability. Monitoring provides the documentation needed to prove compliance, saving you headaches during audits.
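To make an availability requirement concrete: a 99.9% target over a 30-day month leaves 30 × 24 × 60 × 0.001 = 43.2 minutes of allowable downtime. The sketch below computes that budget and the availability actually measured from periodic up/down checks. The 99.9% figure and the one-check-per-minute interval are illustrative assumptions, not values from any specific regulation.

```python
from typing import List


def allowed_downtime_minutes(sla_pct: float, days: int = 30) -> float:
    """Minutes of downtime a period can absorb while still meeting the target."""
    return days * 24 * 60 * (1 - sla_pct / 100)


def measured_availability(checks: List[bool]) -> float:
    """Availability (%) from periodic up/down checks, e.g. one per minute."""
    if not checks:
        raise ValueError("no checks recorded")
    return 100 * sum(checks) / len(checks)
```

Keeping the raw check results alongside these summaries gives auditors both the headline number and the evidence behind it.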

Helps you plan for capacity

By analyzing long-term data trends, you can predict future needs and make informed decisions about scaling your infrastructure. If you notice that your servers experience peak loads every Monday morning, you can prepare for this by adding more resources ahead of time. This proactive capacity planning ensures you maintain optimal performance even during high-demand periods.

Streamlines your incident response process

When an alert is triggered, your team knows exactly what metrics are out of bounds. This focused information helps narrow down potential causes quickly, reducing the time spent diagnosing issues.

For example, if your web server goes down, monitoring can show whether the problem is due to disk space running out, high CPU load, or network issues. This targeted approach accelerates your troubleshooting process, getting your systems back online faster.

Overall, while monitoring may be more reactive than observability, its real-time alerts, consistent network health overview, compliance simplification, capacity planning, and streamlined incident response make it an essential tool for managing your company networks effectively.

Benefits of observability in company networks

Deeper understanding of your system’s behavior

With traditional monitoring, you might get an alert when your web server's CPU usage spikes. But observability lets you dig into the "why" behind this spike. You can correlate this with recent code deployments or increased user activity, providing a more comprehensive picture.

Ability to proactively explore and optimize your systems

Instead of waiting for something to go wrong, observability encourages you to dig into your data and ask questions. For example, you might wonder how your servers handle traffic during major sales events.

By exploring your logs, traces, and metrics, you can identify patterns and make optimizations before the next big sale hits. This proactive approach helps you stay ahead of potential issues, turning insights into preventive actions.

Enhances your troubleshooting capabilities

Imagine users are reporting intermittent errors when applying discount codes. With observability, you can centralize your logs using tools like the ELK stack.

Then, you can search these logs to find correlations between the errors and specific user actions, times of day, or application versions. This rich context helps you understand the root cause of the problem, allowing you to fix it more efficiently.

Boosts efficiency

By providing granular data and detailed insights, observability makes your troubleshooting process quicker and more effective. Instead of sifting through general metrics and vague alerts, you get precise information about what's going wrong and why. This means less downtime and a better user experience overall.

Ultimately, observability fosters a culture of continuous improvement. By regularly exploring your data and understanding your system's behavior more deeply, you can make informed decisions that enhance performance, reliability, and user satisfaction. Observability turns data into actionable insights, helping you build more resilient and efficient company networks.

Netmaker's Role in Enhancing Observability and Monitoring

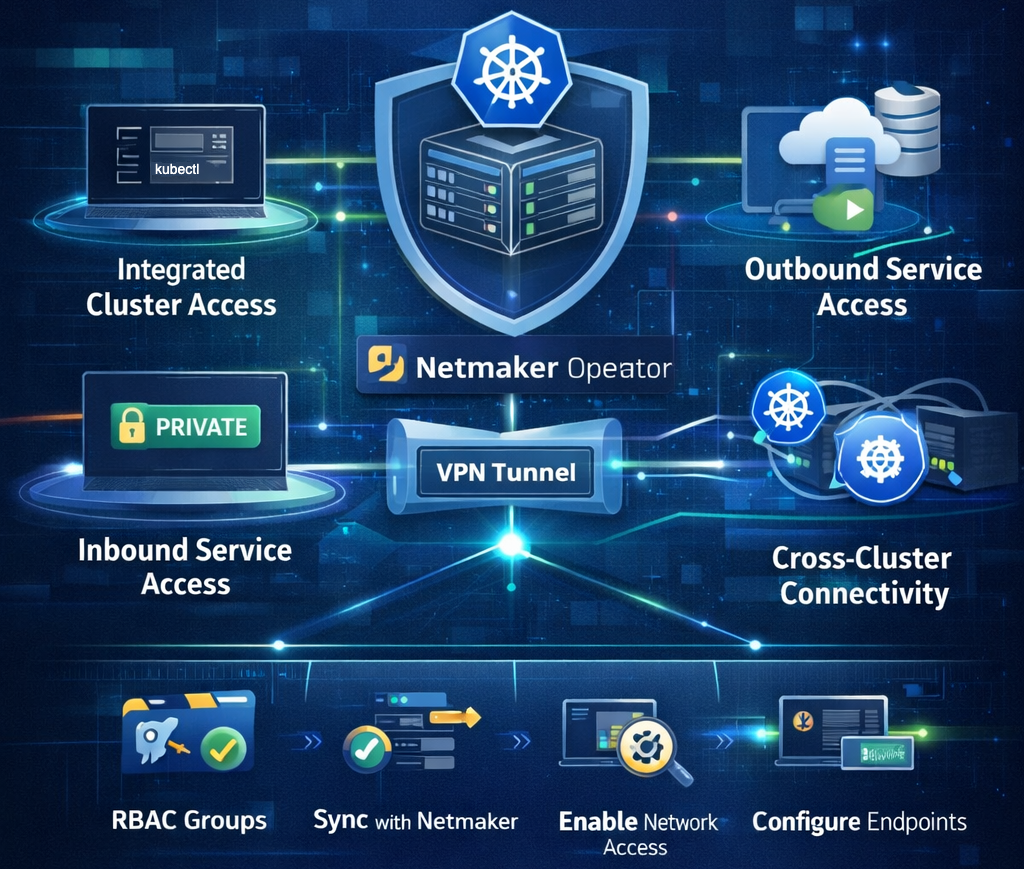

Netmaker, a powerful networking tool, can significantly enhance both monitoring and observability in IT environments. By facilitating seamless and secure connections across distributed networks, Netmaker ensures real-time data flow, which is essential for effective monitoring. Its capabilities allow organizations to deploy a robust network architecture that supports the rapid detection of known issues, thanks to its integration with containerized environments such as Docker and Kubernetes. This integration enables the collection and analysis of key metrics like CPU usage and network traffic, which are vital for maintaining optimal network performance.

Beyond traditional monitoring, Netmaker's advanced features support improved observability by providing deeper insights into network behaviors and interactions. With its ability to modify interfaces and set firewall rules using iptables, Netmaker enhances visibility into network operations, making it easier to diagnose and troubleshoot unknown issues. The platform's flexibility, whether deployed on a single server or within a dedicated networking environment, ensures that IT teams can adapt to complex and dynamic network conditions effectively. To experience these benefits and improve your network's monitoring and observability, get started with Netmaker today.
