What is Container as a Service (CaaS)?

Containers as a Service (CaaS) is a cloud service model that allows you to manage and deploy containerized applications at scale. It is a middle ground between Infrastructure as a Service (IaaS) and Platform as a Service (PaaS). With CaaS, the provider runs the container runtime and orchestration layer, and you deploy and manage your containers on top of it.

How CaaS works

With CaaS, you package your app and all its dependencies into container images, and the platform runs them as containers. These containers run consistently across different environments, whether it's your local machine, a staging server, or a production environment. It's like having a portable, lightweight version of your app that can run anywhere.

Popular examples of CaaS include services like Google Kubernetes Engine (GKE), Amazon Elastic Kubernetes Service (EKS), and Microsoft Azure Kubernetes Service (AKS). With these platforms, you can automatically scale your applications based on demand.

CaaS can spin up additional containers to handle the load if your app suddenly gets a surge in traffic. And when the traffic dies down, it can scale back down, saving you resources and money.
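On Kubernetes-based platforms, this decision is made by the Horizontal Pod Autoscaler. Its core rule is documented as desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric), with a default 10% tolerance band inside which it does not scale. The sketch below reproduces only that arithmetic. The function name and the min/max defaults are illustrative. The real controller also applies stabilization windows and handles unready pods and missing metrics, which this sketch ignores.

```python
import math

def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Simplified version of the HPA's core scaling rule (illustrative helper)."""
    ratio = current_metric / target_metric
    # Inside the tolerance band, the replica count is left unchanged.
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp the result to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))

# Surge: 4 replicas averaging 90% CPU against a 60% target.
print(desired_replicas(4, 90, 60))  # 6
# Traffic dies down: 6 replicas averaging 30% CPU.
print(desired_replicas(6, 30, 60))  # 3
```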

Using CaaS also simplifies the process of updating and deploying your applications. Are you rolling out a new version? You can deploy it into containers and test it without affecting the currently running version. If everything works well, you switch the live traffic to the new version. If something goes wrong, you can easily roll back to the previous version.
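One common way to "switch the live traffic" on Kubernetes is a blue/green setup. The old and new versions run as separate Deployments with different `version` labels, and a Service's label selector decides which one receives traffic. The sketch below builds that Service as a Python dict (kubectl accepts JSON manifests as well as YAML). The app name `my-app`, the `blue`/`green` labels, and the ports are placeholders.

```python
import json

def service_manifest(app: str, live_version: str, port: int = 80,
                     target_port: int = 8080) -> dict:
    """Service that routes traffic only to pods labelled with `live_version`."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": app},
        "spec": {
            # Only pods carrying BOTH labels receive traffic.
            "selector": {"app": app, "version": live_version},
            "ports": [{"port": port, "targetPort": target_port}],
        },
    }

# "blue" (current release) is live; "green" (new release) is deployed but idle.
live = service_manifest("my-app", "blue")
# After testing green, switching traffic is just a selector change...
switched = service_manifest("my-app", "green")
# ...and rolling back means pointing the selector at "blue" again.
print(json.dumps(switched, indent=2))
```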

CaaS also boosts security. Since containers isolate applications from each other and the host system, vulnerabilities are less likely to spread. You can also use tools to scan your container images for vulnerabilities before deploying them. This makes your deployments more secure and gives you peace of mind.

Overall, CaaS offers a powerful way to manage and scale your applications using containers. It's flexible, efficient, and simplifies many aspects of deployment and management. Whether you are deploying a single app or running dozens of microservices, CaaS can make the process smoother and more reliable.

Difference between CaaS, IaaS, and PaaS

To understand how CaaS works and to apply it correctly, it's useful to understand how it fits into the landscape alongside Infrastructure as a Service (IaaS) and Platform as a Service (PaaS).

IaaS gives you the basic building blocks like virtual machines, storage, and networks. It's highly flexible but leaves a lot of the management up to you. Think of Amazon Web Services EC2 or Google Compute Engine. You get virtual servers and you can run anything on them, but you have to handle the OS, runtime, and everything else.

PaaS, on the other hand, abstracts much of that away. It provides a platform you can use to build and deploy applications without worrying about the underlying infrastructure. Google App Engine or Heroku are good examples here.

With PaaS, you just focus on your code and the platform takes care of the rest. It's convenient but less flexible because you are often locked into the provider's ecosystem and tools.

CaaS sits in between these two. It offers more flexibility than PaaS but with more automation and less manual management than IaaS. With CaaS, you manage and deploy containers rather than full virtual machines. It's like getting a pre-configured environment where you can run your containerized applications.

For instance, if you’re using GKE, you can spin up a Kubernetes cluster and deploy your containers. The platform handles the orchestration, scaling, and more. You don't need to manage the underlying virtual machines directly, but you still have more control compared to using a pure PaaS solution.

Let's say you have a microservices architecture. With CaaS, each microservice can run in its own container. You get the benefits of container orchestration, automatic scaling, and easy updates. This simplifies your operations compared to managing VMs with IaaS, where you would need to set up and configure everything yourself.

On the other hand, unlike PaaS, you are not limited to specific languages or frameworks. You can use any stack, as long as it runs in a container. This gives you the freedom to choose the best tools for your needs while still benefiting from the managed services the CaaS platform provides.

In short, CaaS gives you a balanced mix of control and convenience, making it a powerful option for managing modern, containerized applications.

What is a container?

A container is like a self-contained unit of software. Imagine you have an application with its dependencies, libraries, and configuration files. Instead of worrying about whether this app will run on different environments, you package everything into a container. This container then runs consistently across any environment, from your local machine to the cloud.

Containers are lightweight. Unlike virtual machines (VMs), they don't need a full operating system. They share the host system's OS kernel but remain isolated from each other. This isolation is crucial for security and consistency.

For example, if you run a Python app in a container on your laptop, it will behave the same way when you deploy it to a cloud platform like Google Kubernetes Engine (GKE) or Amazon Elastic Kubernetes Service (EKS).

Using Docker, a popular containerization tool, you can create these containers easily. Imagine you've developed a Node.js web app. You write a Dockerfile that specifies the base image (such as an official Node.js image), copies your app's code into the image, and sets the command to start the app.

Running `docker build` will package everything into a container image. This image is a blueprint for your container and can be stored in a container registry like Docker Hub or Google Artifact Registry.

When it’s time to deploy, you use `docker run` to create a container instance from this image. The app runs in its isolated environment, with all its dependencies already included. It’s like magic. You don't have to worry about missing libraries or conflicting versions.

This portability is fantastic. You might have a staging server running a different OS than your development machine. By using containers, you ensure your app runs the same way everywhere. This makes it easier to catch bugs early in the development cycle.

Now, consider scaling. If your Node.js app starts receiving more traffic, you just spin up more container instances. With tools like Kubernetes, this scaling can be automatic. Kubernetes, available as a managed service in GKE, EKS, and Azure Kubernetes Service (AKS), handles orchestration. It decides how many containers to run and on which nodes, balancing the load efficiently.

Another benefit of containers is their small footprint. They start up quickly compared to VMs, saving resources and time. This is especially important for large applications split into microservices.

Each microservice runs in its own container, allowing independent scaling and updates. If you need to roll out a new version of one microservice, you update its container image and redeploy, without touching the other services.

Security is also enhanced with containers. Since each container is isolated, a vulnerability in one doesn't necessarily affect others. You can use tools like Clair to scan your container images for known vulnerabilities before deploying them, adding an extra layer of security.

In essence, containers simplify many aspects of application deployment and management. They're portable, lightweight, and consistent. Whether you are developing a small app or a complex system of microservices, containers make your life easier and your deployments more reliable.

Key components of CaaS

Container orchestration engine

This is the heart of any CaaS platform. Kubernetes is the most popular choice here. It's what Google Kubernetes Engine (GKE), Amazon Elastic Kubernetes Service (EKS), and Microsoft Azure Kubernetes Service (AKS) use.

Kubernetes handles the scheduling and deployment of your containers. It makes sure they run smoothly across a cluster of machines.

For example, if you need to deploy a new version of your app, Kubernetes can roll it out gradually to minimize downtime. It also takes care of scaling. If your app gets a sudden spike in traffic, Kubernetes can spin up more container instances automatically.
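How gradual the rollout is depends on a Deployment's `maxSurge` and `maxUnavailable` settings, both of which default to 25%. Kubernetes rounds a percentage `maxSurge` up and a percentage `maxUnavailable` down. The illustrative helper below (not a Kubernetes API) does that arithmetic and reports the pod-count bounds during a rollout.

```python
import math

def rollout_bounds(replicas: int, max_surge_pct: int = 25,
                   max_unavailable_pct: int = 25) -> tuple[int, int]:
    """Return (max total pods, min available pods) during a rolling update."""
    surge = math.ceil(replicas * max_surge_pct / 100)                # rounded up
    unavailable = math.floor(replicas * max_unavailable_pct / 100)   # rounded down
    return replicas + surge, replicas - unavailable

print(rollout_bounds(4))   # (5, 3)
print(rollout_bounds(10))  # (13, 8)
print(rollout_bounds(1))   # (2, 1): a new pod starts before the old one stops
```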

Container registries

These are like libraries for storing and sharing container images. Docker Hub is a popular public registry, but services like Google Artifact Registry or Amazon Elastic Container Registry (ECR) offer private, secure options.

When you build a container image, you push it to a registry. This makes it easy to pull the image and deploy it wherever you need. For instance, you might develop a new feature, build the container image, and push it to your registry. From there, your Kubernetes cluster can pull the image and deploy it.

Networking

Containers need to communicate with each other and with the outside world. In a Kubernetes environment, this is handled by networking (CNI) plugins like Calico or Flannel. These plugins set up the routes that let your containers talk to each other, and some of them, such as Calico, also enforce network policies that control which containers may communicate.

For example, if you have a front-end service and a back-end service running in different containers, the networking layer ensures they can exchange data without issues.

Storage solutions

While containers are ephemeral and can disappear at any time, your data often needs to persist. Kubernetes integrates with various storage providers like Amazon EBS, Google Persistent Disks, or Azure Disks.

You define persistent volume claims (PVCs) in your Kubernetes configuration, and the platform attaches the necessary storage to your containers. This ensures that your databases and other stateful services can store data reliably.
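Here is a minimal sketch of that pattern, built as Python dicts and wrapped in a `v1` `List` so that a single JSON file can be applied with kubectl. The claim requests 10 GiB from the cluster's default StorageClass, and a pod mounts it. The names `db-data` and `db` and the image `example/db:1.0` are hypothetical placeholders.

```python
import json

# Claim 10 GiB; the cluster's default StorageClass provisions the disk
# (for example, a cloud block volume on GKE, EKS, or AKS).
pvc = {
    "apiVersion": "v1",
    "kind": "PersistentVolumeClaim",
    "metadata": {"name": "db-data"},  # hypothetical name
    "spec": {
        "accessModes": ["ReadWriteOnce"],  # mountable read-write by one node
        "resources": {"requests": {"storage": "10Gi"}},
    },
}

# Pod that mounts the claim; the data outlives any single container.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "db"},
    "spec": {
        "containers": [{
            "name": "db",
            "image": "example/db:1.0",  # hypothetical image
            "volumeMounts": [{"name": "data", "mountPath": "/data"}],
        }],
        "volumes": [{"name": "data",
                     "persistentVolumeClaim": {"claimName": "db-data"}}],
    },
}

print(json.dumps({"apiVersion": "v1", "kind": "List",
                  "items": [pvc, pod]}, indent=2))
```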

Security

Container isolation helps keep a compromise in one container from spreading to others. However, you also need to scan your container images for vulnerabilities. Tools like Clair or Aqua Security can help with this. They scan your images before deployment, flagging any known security issues.

Additionally, Kubernetes provides role-based access control (RBAC). This means you can specify who has access to what resources, adding another layer of security.

Monitoring and logging

You must know what's happening with your containers at all times. Kubernetes integrates with monitoring tools like Prometheus and Grafana to collect metrics and visualize them.

Logging tools like Fluentd and Elasticsearch can gather logs from your containers, making it easier to debug issues. For example, if a container crashes, you can quickly check the logs to diagnose the problem and take corrective action.

These components (container orchestration, registries, networking, storage, security, and monitoring) work together to make CaaS a robust and efficient model for managing your containerized applications. They simplify many of the complexities involved, allowing you to focus on building and deploying your applications with confidence.

Benefits of CaaS for Company Networks

Scalability

Imagine you have a web app that suddenly goes viral. The traffic spikes, and you need more resources to keep everything running smoothly. With CaaS platforms like Google Kubernetes Engine (GKE) or Amazon Elastic Kubernetes Service (EKS), scaling up to meet this demand is straightforward.

Let's say your application consists of several microservices running in containers. If you notice a surge in user activity, Kubernetes can automatically spin up additional instances of these containers to handle the load.

This auto-scaling feature monitors metrics such as the CPU or memory utilization of your containers and adjusts the number of instances dynamically. This means you don't have to scramble to add resources manually when traffic increases.

For example, suppose your Node.js-based front-end service starts receiving double the usual number of requests. Kubernetes can detect the increased load and automatically deploy more container instances to manage the traffic. As a result, your users experience seamless performance without slowdowns or downtime.
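In Kubernetes, this behavior is configured with a HorizontalPodAutoscaler object. The sketch below builds one for a hypothetical `frontend` Deployment as a Python dict using the `autoscaling/v2` API, and prints it as JSON for `kubectl apply`. It keeps between 2 and 10 replicas and targets 70% average CPU utilization. All of these numbers are placeholders. Utilization is measured relative to the containers' CPU requests, so the Deployment must set requests, and the cluster needs a metrics source such as metrics-server.

```python
import json

hpa = {
    "apiVersion": "autoscaling/v2",
    "kind": "HorizontalPodAutoscaler",
    "metadata": {"name": "frontend"},  # hypothetical name
    "spec": {
        # The workload whose replica count the autoscaler controls.
        "scaleTargetRef": {"apiVersion": "apps/v1", "kind": "Deployment",
                           "name": "frontend"},
        "minReplicas": 2,
        "maxReplicas": 10,
        "metrics": [{
            "type": "Resource",
            "resource": {
                "name": "cpu",
                # Scale out when average CPU exceeds 70% of requested CPU.
                "target": {"type": "Utilization", "averageUtilization": 70},
            },
        }],
    },
}
print(json.dumps(hpa, indent=2))
```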

CaaS also supports horizontal scaling across multiple nodes. If your application needs more computing power than a single node can provide, Kubernetes can distribute your containers across several nodes in the cluster.

This not only boosts performance but also improves fault tolerance. For example, if one node crashes, Kubernetes can redistribute the containers to other healthy nodes, ensuring continuous service availability.

Another scenario that highlights the scalability benefits of CaaS is during scheduled high-load events. Suppose you are an e-commerce site gearing up for a big sale. You can preemptively scale up your resources to handle the anticipated traffic.

Kubernetes makes this easy by allowing you to configure and deploy additional containers in advance. Once the sale ends, you can scale back down effortlessly.

Cost efficiency

Using Containers as a Service (CaaS) can significantly improve your cost efficiency. One major factor is resource optimization. Containers are lightweight compared to virtual machines (VMs). They share the host operating system's kernel, which means you can run more containers on a single piece of hardware than you could VMs.

This higher density translates to lower infrastructure costs. For example, instead of deploying dozens of VMs for each microservice in your application, you can run these microservices in containers on just a few nodes. This maximizes your hardware utilization and reduces your overall spend.
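A back-of-the-envelope calculation makes the density argument concrete. The numbers below are made up for illustration: 30 microservices, each requesting 250 millicores of CPU and 512 MiB of memory, on nodes with 4000 millicores and 16 GiB allocatable. The helper divides total requests by node capacity. That gives a lower bound only, because real scheduling also needs headroom for system pods and is limited by how well the pods pack.

```python
import math

def min_nodes(services: int, cpu_m: int, mem_mib: int,
              node_cpu_m: int, node_mem_mib: int) -> int:
    """Lower bound on nodes needed to fit every container's resource requests."""
    by_cpu = math.ceil(services * cpu_m / node_cpu_m)
    by_mem = math.ceil(services * mem_mib / node_mem_mib)
    # The scarcer resource decides how many nodes are needed.
    return max(by_cpu, by_mem)

# Hypothetical workload: 30 services x (250m CPU, 512 MiB) on 4-CPU/16-GiB nodes.
print(min_nodes(30, 250, 512, 4000, 16384))  # 2 nodes, versus 30 one-per-service VMs
```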

Another way CaaS saves you money is through auto-scaling. Think about your web app during peak times, like Black Friday for an e-commerce site. With Kubernetes, you can automatically scale out additional container instances to handle the increased load.

Once the rush is over, Kubernetes scales down the instances, and a cluster autoscaler can remove nodes that are no longer needed, so you are not paying for unused resources. This elasticity means you only pay for what you use, when you use it. There's no need to over-provision resources "just in case" anymore.

Moreover, the speed of deployment and start-up time for containers helps reduce costs. Traditional VMs can take minutes to boot up, but containers start almost instantly. If you face a sudden spike in traffic, you can respond quicker with containers, maintaining performance without needing excessive standby infrastructure. Quicker response times not only keep your users happy but also optimize your cloud spending.

Managed CaaS platforms like Google Kubernetes Engine (GKE) and Amazon Elastic Kubernetes Service (EKS) also offer integrated cost management tools. These platforms provide detailed insights into resource usage and costs, helping you identify areas where you can optimize.

For example, you might find that certain microservices are over-provisioned or that specific times of day see lower usage. By analyzing these patterns, you can adjust your deployments to save money.

Additionally, container reusability can lead to cost reductions in development and testing. You can use the same container images across different stages of your workflow — development, testing, staging, and production.

This consistency reduces the time and effort needed to troubleshoot environment-specific issues. Less time fixing bugs means lower development costs and faster time-to-market for new features.

Finally, using containers simplifies your infrastructure, reducing operational overhead. Managing a fleet of VMs involves maintaining numerous operating systems, applying patches, and dealing with configuration drift.

Containers, however, encapsulate everything needed to run an application, reducing the complexity of system maintenance. This streamlined management approach can lower your operational expenses by requiring fewer dedicated resources and less administrative effort.

Simplified management

Using Containers as a Service (CaaS) simplifies the management of your applications. One of the biggest advantages is the ease of deployment.

With Kubernetes, you don't need to write complex scripts or manually configure servers. You define your application’s desired state in simple YAML files. These files describe how your containers should be deployed, scaled, and updated.

For example, if you need to roll out a new version of your Node.js app, you just update the image tag in your deployment file and apply it. Kubernetes handles the rest, ensuring a smooth rollout.
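The sketch below builds a minimal Deployment as a Python dict and shows that a new release changes only the container's `image` field. It prints JSON, which `kubectl apply -f` accepts as well as YAML. The registry path `registry.example.com/node-app`, the tags, and port 3000 are placeholders.

```python
import copy
import json

def deployment(name: str, image: str, replicas: int = 3) -> dict:
    """Minimal Deployment: a desired replica count plus a pod template."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},  # must match the template labels
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{
                    "name": name,
                    "image": image,
                    "ports": [{"containerPort": 3000}],  # placeholder port
                }]},
            },
        },
    }

def with_image(manifest: dict, image: str) -> dict:
    """Return a copy of the manifest with the container image replaced."""
    updated = copy.deepcopy(manifest)
    updated["spec"]["template"]["spec"]["containers"][0]["image"] = image
    return updated

v1 = deployment("node-app", "registry.example.com/node-app:1.0.0")
v2 = with_image(v1, "registry.example.com/node-app:1.1.0")
print(json.dumps(v2, indent=2))
```

Applying `v2` changes the pod template, which is what triggers the rolling update. `kubectl set image deployment/node-app node-app=<new image>` achieves the same without editing the file.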

Keeping applications running smoothly is also easier. Kubernetes includes built-in mechanisms for restarting containers that crash or fail.

Suppose one of your containers running a critical microservice goes down. Kubernetes will automatically spin up a new instance to replace it. You don’t have to wake up in the middle of the night to address failures manually. This kind of self-healing makes your life much easier and ensures higher availability for your services.
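Kubernetes automatically restarts a container whose process exits. With a liveness probe, it also restarts a container that is still running but has stopped responding. Below is a container spec fragment with an HTTP liveness probe, written as a Python dict. It assumes your app serves a health endpoint at `/healthz` on port 3000. That path, the image name, and the timing values are placeholders to adapt.

```python
import json

container = {
    "name": "node-app",
    "image": "registry.example.com/node-app:1.1.0",  # placeholder image
    "ports": [{"containerPort": 3000}],
    "livenessProbe": {
        # Kubernetes sends GET /healthz (assumed endpoint) to the container.
        "httpGet": {"path": "/healthz", "port": 3000},
        "initialDelaySeconds": 10,  # give the app time to boot
        "periodSeconds": 5,         # probe every 5 seconds
        "failureThreshold": 3,      # restart after 3 consecutive failures
    },
}
print(json.dumps(container, indent=2))
```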

Another area where CaaS shines is managing configuration and secrets. You often need to store sensitive information like API keys or database passwords securely.

Kubernetes provides a built-in system for managing these through ConfigMaps and Secrets. You can inject configuration data into your containers at runtime without baking them into your images.

For example, if your application needs access to a third-party API, you store the API key as a Kubernetes Secret and reference it in your pod configuration. This keeps your sensitive data safe and separate from your codebase.
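Here is a minimal sketch of that setup as Python dicts. It defines a Secret holding the key, plus a container spec fragment that exposes it as an environment variable through `secretKeyRef`. The Secret name `payments-api`, the key `api-key`, and the variable `PAYMENTS_API_KEY` are hypothetical. The value shown is a placeholder and should never be committed for real.

```python
import json

# Secret holding the third-party API key. `stringData` accepts plain text;
# Kubernetes stores it base64-encoded under `data`.
secret = {
    "apiVersion": "v1",
    "kind": "Secret",
    "metadata": {"name": "payments-api"},       # hypothetical name
    "type": "Opaque",
    "stringData": {"api-key": "replace-me"},    # placeholder value
}

# Container spec fragment: the key arrives as an environment variable at runtime.
container = {
    "name": "node-app",
    "image": "registry.example.com/node-app:1.1.0",  # placeholder image
    "env": [{
        "name": "PAYMENTS_API_KEY",  # hypothetical variable name
        "valueFrom": {"secretKeyRef": {"name": "payments-api", "key": "api-key"}},
    }],
}
print(json.dumps({"secret": secret, "container": container}, indent=2))
```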

Monitoring and logging become almost effortless with CaaS. Kubernetes integrates seamlessly with tools like Prometheus for monitoring and Fluentd for logging. You get real-time insights into your application’s performance and can quickly identify issues.

Suppose your app is running slower than expected. Prometheus metrics can help you pinpoint where the bottleneck is happening. Fluentd collects logs from all your containers, making it easier to debug problems by providing a centralized view. You don’t need to log into individual containers to hunt for errors.

Managing updates and rollbacks is another task where CaaS excels. With traditional deployment approaches, updating an application could be risky and prone to errors.

Kubernetes makes this process almost painless. You can perform rolling updates, where new versions of your containers are incrementally introduced without downtime. If something goes wrong, Kubernetes allows for easy rollbacks to a previous version.

Imagine deploying a new feature that causes unexpected behavior. With a single command, you can revert to the last stable release, minimizing user impact.

Networking and service discovery are also simplified. Kubernetes handles the complexity of networking between containers. Each service gets a stable IP address and DNS name, making inter-service communication straightforward.

For instance, if your front-end service needs to call your back-end service, it simply uses the back-end service name provided by Kubernetes. You don’t need to manage IP addresses manually or reconfigure services when scaling.
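Cluster DNS gives a Service named `backend` in namespace `shop` the name `backend.shop.svc.cluster.local`. Here `cluster.local` is the default cluster domain, which administrators can change. Pods in the same namespace can use just `backend`. The helper below builds the fully qualified URL. The namespace, port, and scheme are placeholders.

```python
def service_url(service: str, namespace: str = "default", port: int = 80,
                cluster_domain: str = "cluster.local", scheme: str = "http") -> str:
    """Fully qualified in-cluster URL for a Kubernetes Service."""
    return f"{scheme}://{service}.{namespace}.svc.{cluster_domain}:{port}"

print(service_url("backend", "shop", 8080))
# http://backend.shop.svc.cluster.local:8080
```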

CaaS truly shifts the focus from infrastructure management to application development. It streamlines many of the tasks that used to be cumbersome, enabling you to deliver features faster and with more reliability.

Enhanced security

Using Containers as a Service (CaaS) drastically boosts your security game. One of the top perks is the isolation containers provide. Each container runs in its own environment, separate from others.

If a vulnerability is exploited in one container, isolation makes it much harder for an attacker to reach the others. Imagine you have a compromised front-end container. Thanks to isolation, the back-end services are not automatically exposed.

Container scanning is another powerful tool. Before deploying, you can scan your container images for vulnerabilities. Tools like Clair or Aqua Security come in handy here. They check your images against a database of known vulnerabilities. If a security issue is found, you can fix it before the container ever goes live. This proactive approach helps you catch problems early.

Role-based access control (RBAC) also boosts security in Kubernetes. It allows you to define who can do what within your cluster. For instance, you can restrict developers to deploying new containers but not accessing sensitive data.
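Here is a sketch of that developer example as Kubernetes RBAC objects, built as Python dicts. A namespaced Role allows managing Deployments and reading pods and logs, and a RoleBinding grants it to a hypothetical `developers` group in a hypothetical `shop` namespace. RBAC rules are purely additive, with no deny rules, so "no access to sensitive data" simply means the Role never lists `secrets`. The Role also omits the `delete` verb, so deleting stays with admins.

```python
import json

NAMESPACE = "shop"  # hypothetical namespace

role = {
    "apiVersion": "rbac.authorization.k8s.io/v1",
    "kind": "Role",
    "metadata": {"name": "deployer", "namespace": NAMESPACE},
    "rules": [
        {"apiGroups": ["apps"], "resources": ["deployments"],
         "verbs": ["get", "list", "watch", "create", "update", "patch"]},
        {"apiGroups": [""], "resources": ["pods", "pods/log"],
         "verbs": ["get", "list", "watch"]},
        # No rule mentions "secrets", so this Role grants no Secret access.
    ],
}

binding = {
    "apiVersion": "rbac.authorization.k8s.io/v1",
    "kind": "RoleBinding",
    "metadata": {"name": "developers-deployer", "namespace": NAMESPACE},
    "subjects": [{"kind": "Group", "name": "developers",  # hypothetical group
                  "apiGroup": "rbac.authorization.k8s.io"}],
    "roleRef": {"kind": "Role", "name": "deployer",
                "apiGroup": "rbac.authorization.k8s.io"},
}

print(json.dumps({"apiVersion": "v1", "kind": "List",
                  "items": [role, binding]}, indent=2))
```

Keep in mind that anyone who can create workloads in a namespace can indirectly use the Secrets in it by mounting them into a pod. For that reason, sensitive Secrets are often kept in a separate namespace.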

This fine-grained control adds a layer of security by limiting potential damage from compromised accounts or insider threats. For example, only your admin team might have the rights to delete a service.

Managing secrets securely is crucial, and Kubernetes makes this easier. Instead of hardcoding sensitive information like API keys or passwords into your code, you use Kubernetes Secrets, which are injected into containers at runtime. By default, Secret values are only base64-encoded, so enable encryption at rest for them and restrict who can read them with RBAC.

This method keeps your sensitive data secure and out of your source code. Suppose you need to access a database; you store the credentials as Kubernetes Secrets and access them through environment variables.
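By default, the `data` field of a Kubernetes Secret is only base64-encoded, and base64 is an encoding rather than encryption. Anyone who can read the Secret object can therefore recover the value. A quick demonstration with Python's standard library, using a made-up password:

```python
import base64

# What a Secret stores under `data` for the (made-up) value "s3cr3t-password".
encoded = base64.b64encode(b"s3cr3t-password").decode("ascii")
print(encoded)

# Decoding needs no key at all: base64 is an encoding, not encryption.
print(base64.b64decode(encoded).decode("utf-8"))  # s3cr3t-password
```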

Network policies in Kubernetes also beef up your security. They allow you to control which containers can communicate with each other. For example, you can set a policy that only allows your front-end service to talk to the back-end service, but not to the database directly. This minimizes the attack surface within your cluster, making it harder for a compromised container to reach critical services.
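Here is a sketch of that database rule as a NetworkPolicy, built as a Python dict. Once the policy selects the database pods for ingress, they accept connections only from pods labelled `app: backend` in the same namespace, on TCP 5432. Front-end pods are not on that list. The labels and port are assumptions about how your workloads are labelled. NetworkPolicies take effect only if the cluster's network plugin enforces them (Calico does, for example).

```python
import json

db_policy = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "NetworkPolicy",
    "metadata": {"name": "db-allow-backend-only"},
    "spec": {
        # The policy applies to database pods (assumed label).
        "podSelector": {"matchLabels": {"app": "database"}},
        "policyTypes": ["Ingress"],
        "ingress": [{
            # Only back-end pods in the same namespace may connect...
            "from": [{"podSelector": {"matchLabels": {"app": "backend"}}}],
            # ...and only on the database port (assumed PostgreSQL default).
            "ports": [{"protocol": "TCP", "port": 5432}],
        }],
    },
}
print(json.dumps(db_policy, indent=2))
```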

Regular updates and patching add another layer of security. In traditional environments, keeping the operating system and applications up-to-date can be cumbersome. With containers, you rebuild your images on updated base images and redeploy them.

Kubernetes handles the rollout, keeping downtime to a minimum. This makes it straightforward to keep running patched versions of your software. Imagine a critical vulnerability is discovered in your base image: you rebuild your image on the patched base, push it to your registry, and Kubernetes rolls it out across the cluster.

Audit logs are also invaluable for container security. Kubernetes can record who did what and when. This audit trail is essential for compliance and forensics.

If something suspicious happens, you can quickly review the logs to understand what went wrong and take corrective actions. For instance, if an unauthorized change is made, the audit logs will show who did it, helping you to mitigate any potential damage.

Network encryption ensures data in transit is secure. You can use Kubernetes Ingress with SSL/TLS to encrypt traffic between your users and your services. This means sensitive information like user data or financial transactions is protected as it travels over the network. TLS typically terminates at the ingress controller, so traffic inside the cluster is not covered by this alone. For example, your e-commerce site's checkout process will be encrypted, safeguarding your customers' payment info.
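Here is a minimal Ingress with TLS, built as a Python dict. It serves the placeholder host `shop.example.com` with a certificate stored in a Secret named `shop-tls` (of type `kubernetes.io/tls`), and routes all paths to a `frontend` Service on port 80. The host, Secret, and Service names are hypothetical, and the cluster needs an ingress controller installed for the object to have any effect.

```python
import json

ingress = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "Ingress",
    "metadata": {"name": "shop"},  # hypothetical name
    "spec": {
        # Certificate and key come from a kubernetes.io/tls Secret.
        "tls": [{"hosts": ["shop.example.com"], "secretName": "shop-tls"}],
        "rules": [{
            "host": "shop.example.com",
            "http": {"paths": [{
                "path": "/",
                "pathType": "Prefix",  # match every path under "/"
                "backend": {"service": {"name": "frontend",
                                        "port": {"number": 80}}},
            }]},
        }],
    },
}
print(json.dumps(ingress, indent=2))
```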

Improved development and deployment speed

Using Containers as a Service (CaaS) significantly boosts your development and deployment speed. One of the key reasons is the consistency containers provide. When you package your application and its dependencies into a container, it runs the same way everywhere.

This eliminates those frustrating "works on my machine" issues. For instance, if you are developing a new feature on your local machine, you know it will work the same way in staging and production. This consistency speeds up your development cycle because you spend less time troubleshooting environment-specific problems.

Another advantage is the speed of container start-up times. Containers are lightweight and start almost instantly compared to virtual machines (VMs).

Imagine you are rolling out a new feature and need to scale your application to handle additional load. With VMs, you might wait several minutes for new instances to boot up. Containers, however, can be up and running in seconds. This rapid start-up time means you can respond to changes in demand much quicker, ensuring a smooth user experience.

Creating and managing your development environment is also easier with CaaS. You can define each service's image in a `Dockerfile` and use Docker Compose to spin up a multi-container environment.

Suppose your application uses a Node.js front-end, a Python back-end, and a PostgreSQL database. You can describe all these services in a single `docker-compose.yml` file.

Running `docker-compose up` brings your whole environment to life in one command. This setup ensures that every developer has the same environment, speeding up onboarding and reducing the time spent on configuration.
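Here is a sketch of what such a Compose file could contain for the Node.js, Python, and PostgreSQL example, written as a Python dict that mirrors the `docker-compose.yml` structure. You would normally write the YAML by hand or dump the dict with a YAML library. The build directories `./frontend` and `./backend`, the ports, the `DATABASE_URL` variable, and the credentials are placeholders. `POSTGRES_USER`, `POSTGRES_PASSWORD`, and `POSTGRES_DB` are settings read by the official `postgres` image. On Compose's default network, each service is reachable by its service name, which is why the back end can use the host name `db`.

```python
import json

compose = {
    "services": {
        "frontend": {
            "build": "./frontend",          # hypothetical Node.js app directory
            "ports": ["3000:3000"],         # host:container
            "depends_on": ["backend"],
        },
        "backend": {
            "build": "./backend",           # hypothetical Python app directory
            # "db" resolves to the database container on the Compose network.
            "environment": {"DATABASE_URL": "postgresql://app:app@db:5432/app"},
            "depends_on": ["db"],
        },
        "db": {
            "image": "postgres:16",
            "environment": {"POSTGRES_USER": "app",      # placeholder credentials
                            "POSTGRES_PASSWORD": "app",
                            "POSTGRES_DB": "app"},
            "volumes": ["db-data:/var/lib/postgresql/data"],
        },
    },
    "volumes": {"db-data": {}},  # named volume so data survives restarts
}
print(json.dumps(compose, indent=2))
```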

Kubernetes offers features that streamline the deployment process even further. Consider rolling updates. When you deploy a new version of your application, Kubernetes can incrementally update the containers, replacing them a few at a time. This avoids downtime and keeps disruption to your users minimal.

If something goes wrong, Kubernetes makes it easy to roll back to the previous version. You can run `kubectl rollout undo`, or update the deployment file with the desired image version, and Kubernetes handles the rest. This capability reduces the risk and complexity of deployments, making it faster to push new features to production.

The integration with CI/CD pipelines is another notable benefit. You can automate the build, test, and deployment stages using tools like Jenkins, GitLab CI, or GitHub Actions.

Suppose you push some new code to your repository. The CI/CD pipeline can automatically build a new Docker image, run tests, and deploy the image to your Kubernetes cluster. This automation speeds up the entire development cycle and ensures that your application is always in a deployable state. You catch bugs early and deploy fixes faster, resulting in a more efficient workflow.

Using Kubernetes ConfigMaps and Secrets also accelerates development. You can manage your configuration and sensitive data outside your application code. This separation means you can update configurations without rebuilding your container images.

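Imagine you need to change the database connection string. You only update the ConfigMap or Secret. If your containers read the value from a mounted volume, Kubernetes eventually refreshes the file in the running pods. If they read it from an environment variable, you restart the pods (for example with `kubectl rollout restart`) to pick up the new value. Either way, no image rebuild is needed, which allows for quicker changes and reduces the need for downtime.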

Local development becomes faster, too. With tools like Docker Desktop or Minikube, you can run a local Kubernetes cluster on your machine. This setup closely mirrors your production environment. You can develop and test new features locally with confidence that they will behave the same way in production. Debugging becomes faster as you can replicate production issues in your local environment.

Overall, CaaS removes many of the bottlenecks in traditional development and deployment workflows. From rapid start-up times and consistent environments to automated deployments and easy configuration management, it all adds up to a faster, more streamlined process. You can focus more on writing code and less on managing the complexities of deployment, pushing features to your users quicker than ever.

Enhancing CaaS with Netmaker

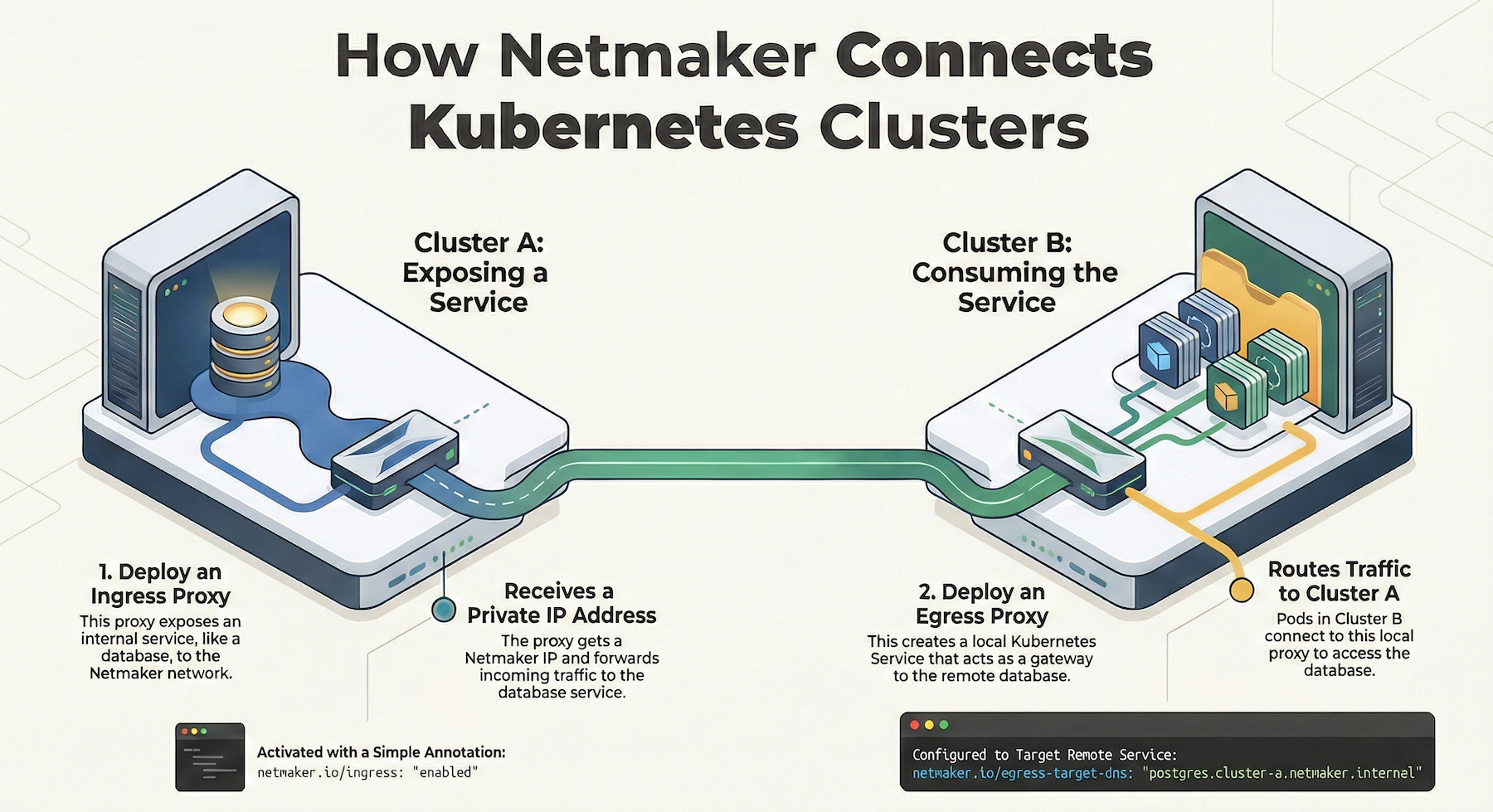

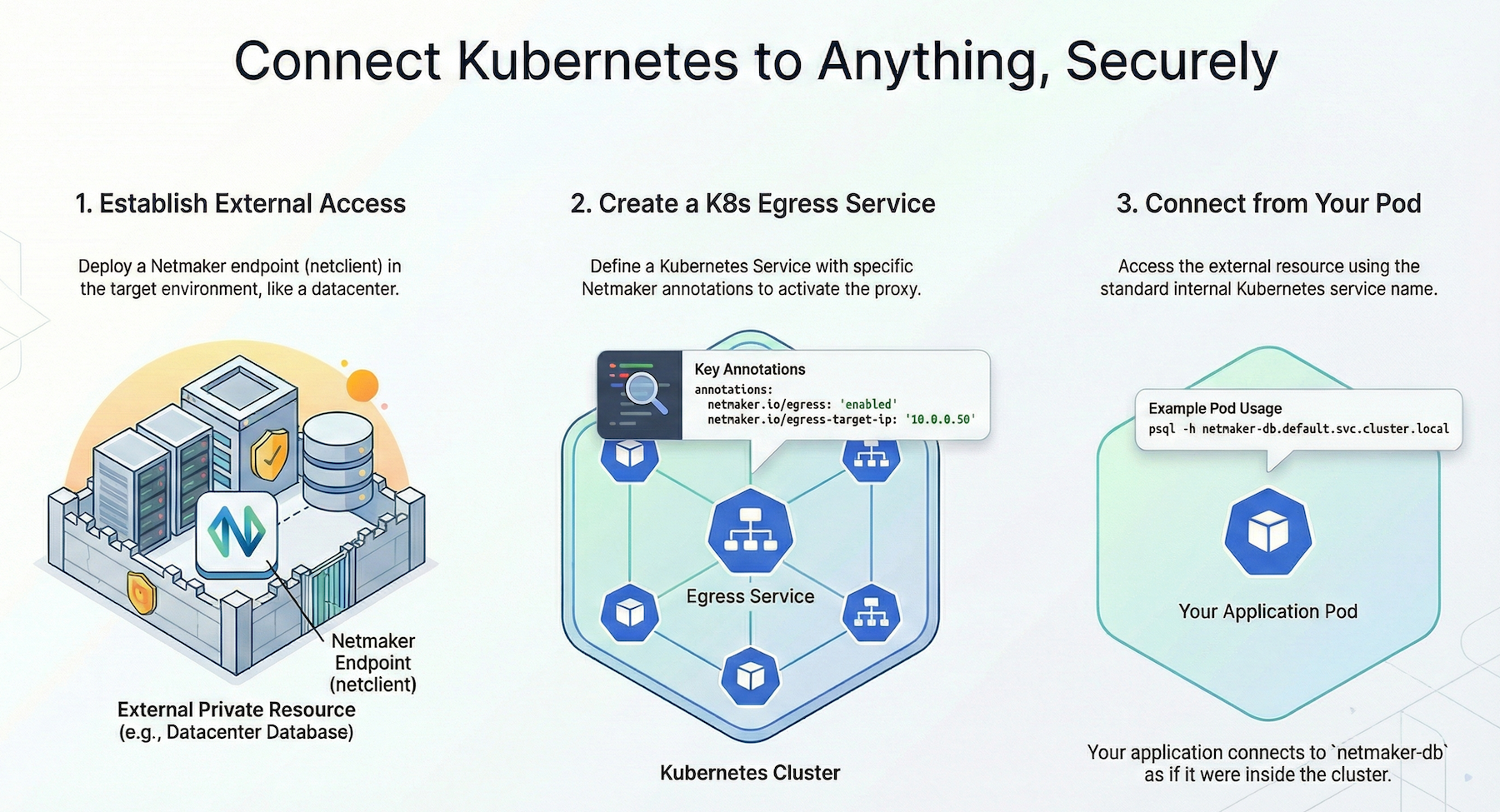

Netmaker significantly enhances the flexibility and security of Container as a Service (CaaS) platforms by providing a robust networking solution that simplifies the deployment and management of containerized applications. With features such as easy-to-setup virtual networks and automatic peer-to-peer connectivity, Netmaker allows for seamless integration across diverse environments, whether on-premises or in the cloud. This capability ensures that your containerized applications can communicate efficiently and securely, thereby optimizing performance and reducing latency.

Moreover, Netmaker's compatibility with Kubernetes and Docker makes it an ideal choice for managing container networks. By streamlining the process of setting up secure, scalable networks, Netmaker minimizes the complexities associated with manual network configurations. Its support for advanced networking features like dynamic DNS and IP management further bolsters the security and reliability of your CaaS deployments. To get started with Netmaker and take advantage of these powerful capabilities, sign up at Netmaker.
